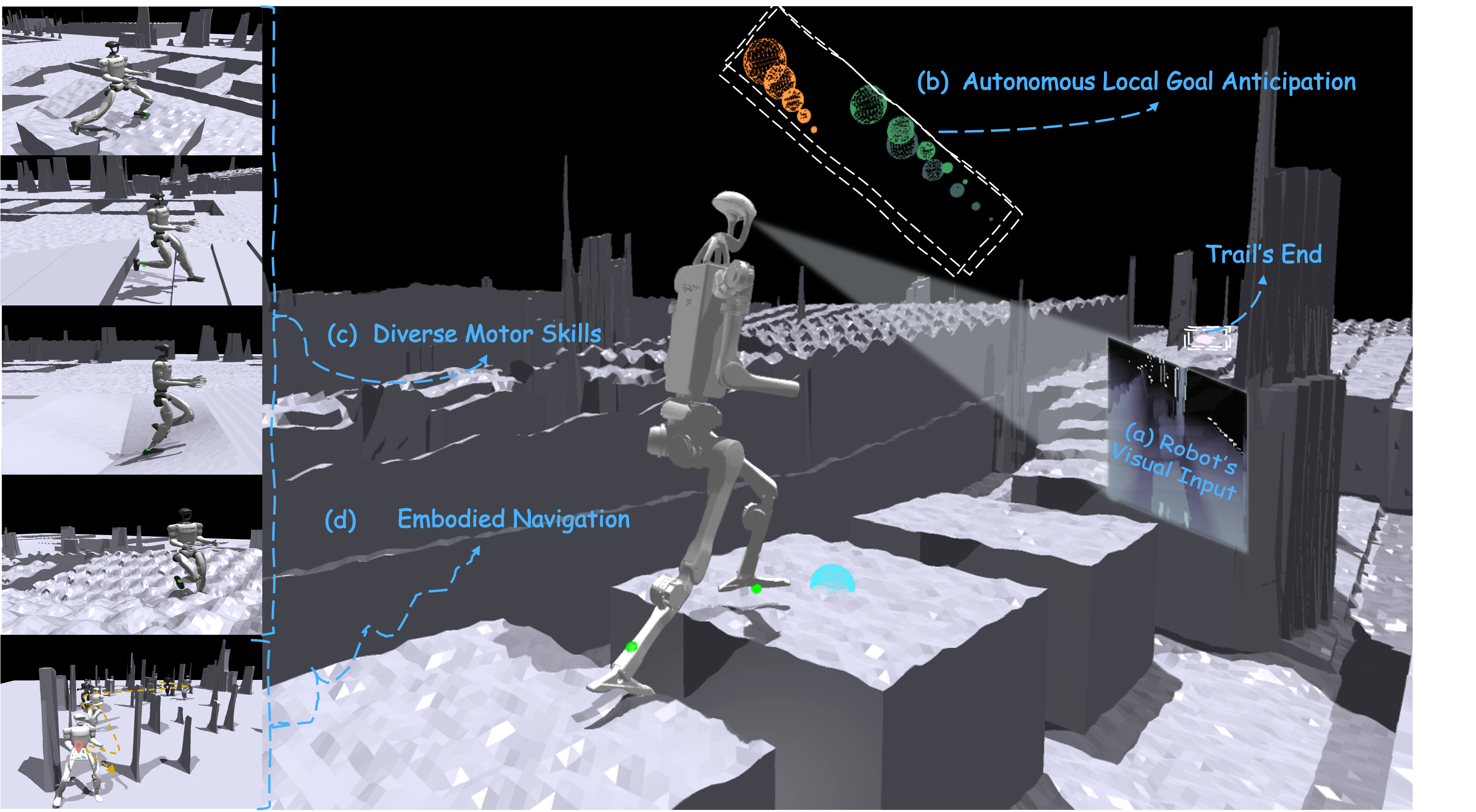

Hiking challenges humans to master diverse motor skills and adapt to complex, unpredictable terrain, such as steep slopes, wide ditches, tangled roots, and sudden elevation changes. It demands continuous balance, agility, and real-time decision-making, making it an ideal testbed for advancing humanoid autonomy and the integration of vision, planning, and motor control. Hiking-capable robots could explore remote areas, assist in rescue missions, and guide individuals along rugged paths.

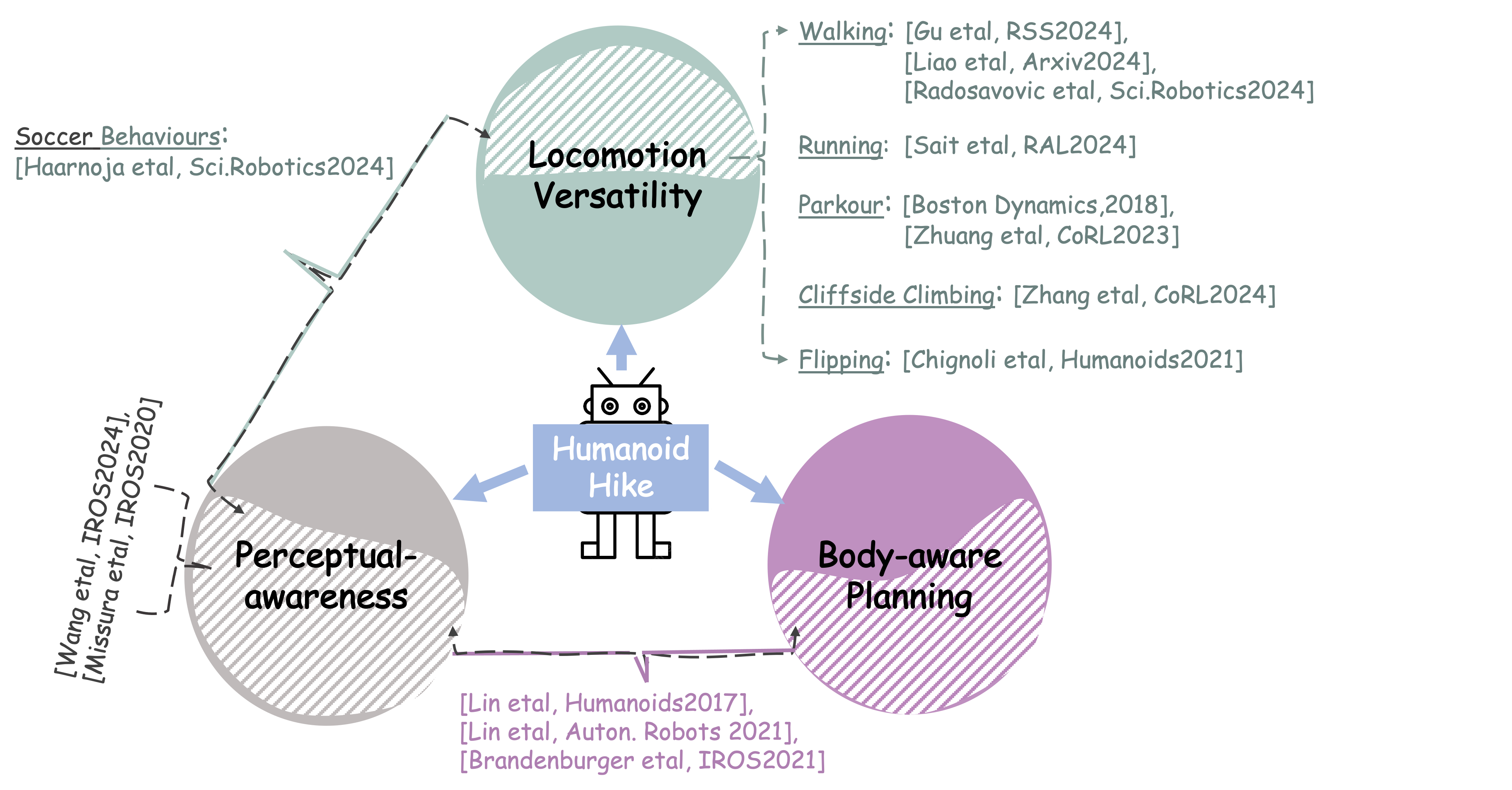

(1) Locomotion versatility. Hiking trails span a vast range of terrains, such as dirt, rocks, stairs, and streams, often coexisting on a single trail. Such complex and hazardous environments demand that robots go beyond blind locomotion and basic skills like walking and running. They must autonomously adapt to environmental variations with dynamic skills like jumping and leaping while maintaining balance on mixed surfaces.

(2) Body awareness. Hiking introduces a local planning problem beyond traditional goal navigation: the robot must make real-time adjustments in response to local obstacles, terrain changes, and its own body state. This requires seamless coordination between visual perception and motor control, so that the robot can adaptively plan feasible foot placements and movements in response to immediate environmental or body-state changes as it progresses along the trail.

(3) Perceptual awareness. Navigating complex 3D trails requires robots to sense and react to diverse obstacles, for example by stepping over logs or navigating around trees. Robots must leverage perceptual awareness from onboard sensors to dynamically select agile, context-appropriate actions, ensuring safe trail traversal.

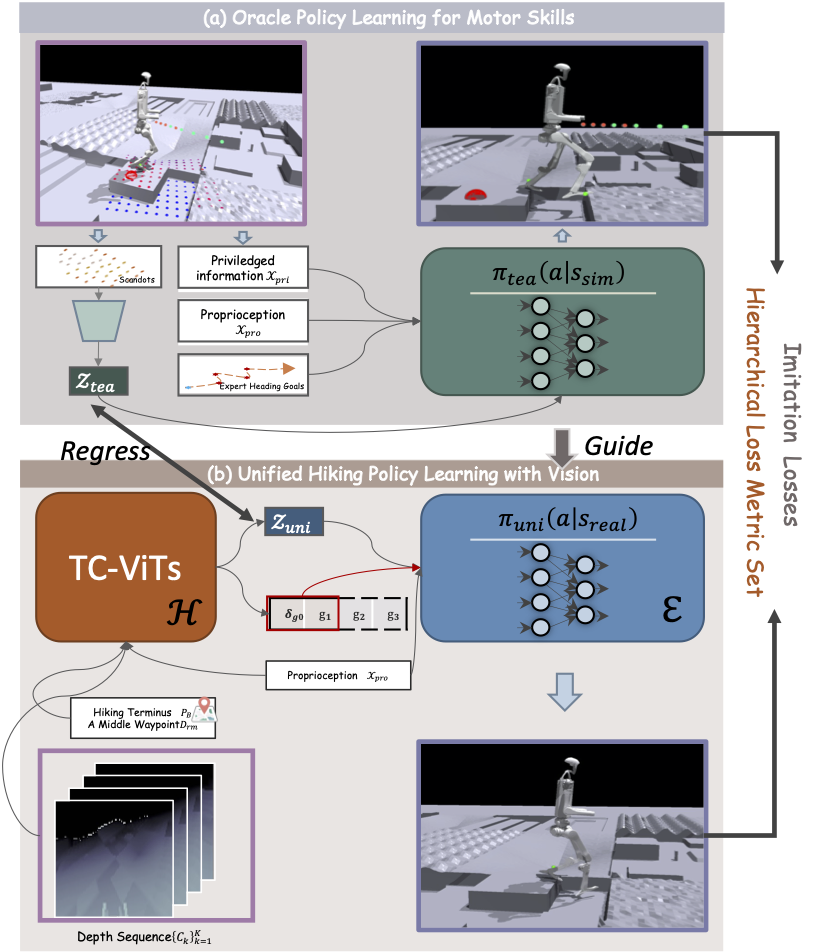

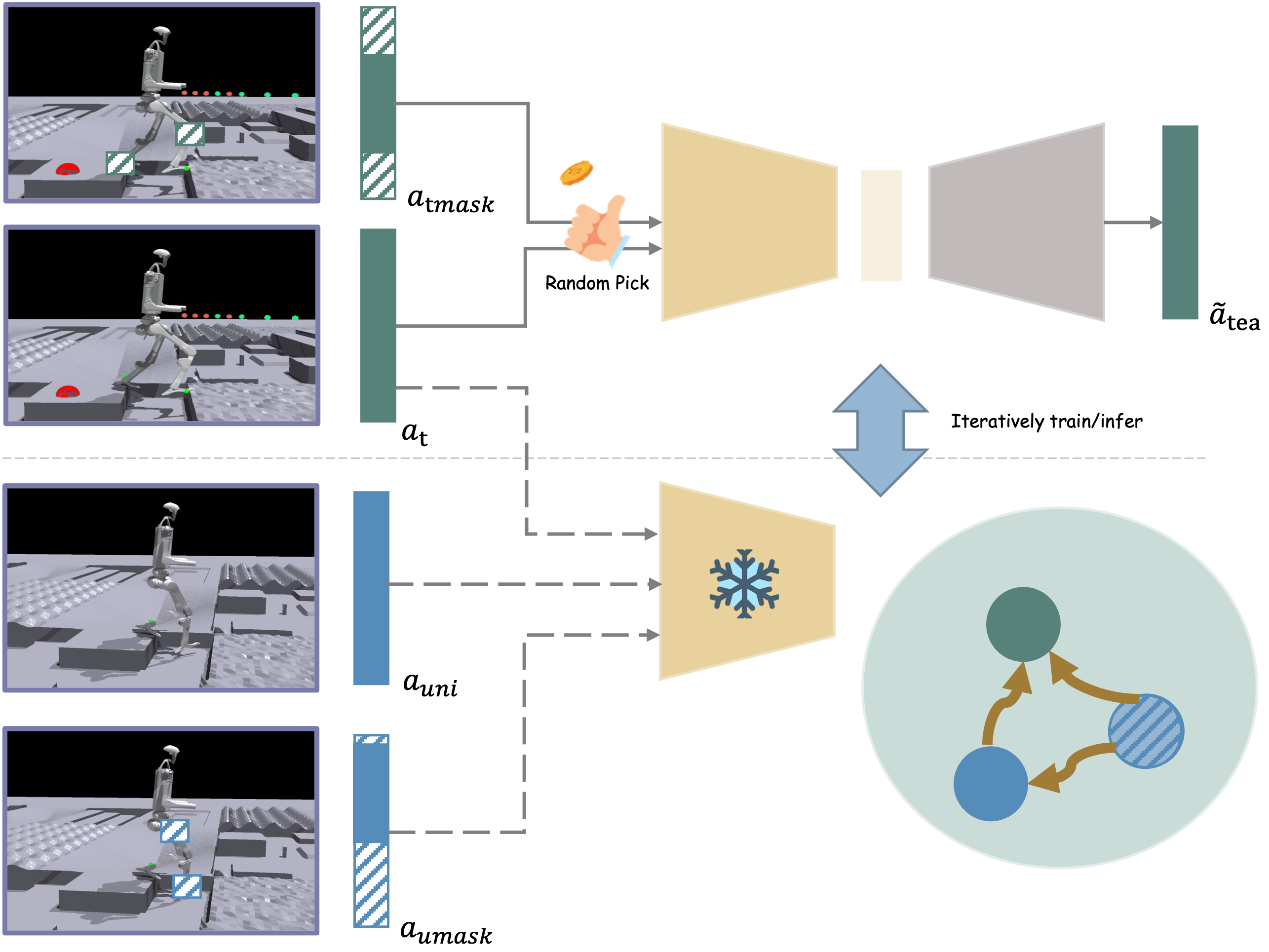

LEGO-H Framework Overview. LEGO-H equips humanoid robots with adaptive hiking skills by integrating navigation and locomotion in a unified, end-to-end learning framework (b). To foster versatile motor skills, we train the unified policy via privileged learning from an oracle policy (a).
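As a rough illustration of what privileged learning from an oracle policy can look like, the sketch below distills a teacher that sees extra (privileged) state into a student that sees only regular observations. It is a toy example under stated assumptions, not LEGO-H's training code: the linear policies, the dimensions, the squared-error imitation loss, and every name (`run_distillation`, `distill_step`, `OBS_DIM`, and so on) are hypothetical.

```python
import numpy as np

# Hypothetical sizes, chosen only for illustration.
OBS_DIM, PRIV_DIM, ACT_DIM = 8, 4, 3


def teacher_action(W_teacher, obs, priv):
    """Oracle policy: sees observations AND privileged state."""
    return np.concatenate([obs, priv], axis=-1) @ W_teacher.T


def student_action(W_student, obs):
    """Student policy: sees observations only."""
    return obs @ W_student.T


def distill_step(W_student, W_teacher, obs, priv, lr=0.05):
    """One gradient step on the squared error between student and oracle actions."""
    err = student_action(W_student, obs) - teacher_action(W_teacher, obs, priv)
    loss = float(np.mean(np.sum(err ** 2, axis=-1)))
    grad = 2.0 * err.T @ obs / obs.shape[0]  # gradient w.r.t. W_student
    return W_student - lr * grad, loss


def run_distillation(steps=200, batch=64, seed=0):
    rng = np.random.default_rng(seed)
    # Frozen oracle; in practice it would be trained first, with privileged inputs.
    W_teacher = rng.normal(size=(ACT_DIM, OBS_DIM + PRIV_DIM))
    W_student = np.zeros((ACT_DIM, OBS_DIM))
    losses = []
    for _ in range(steps):
        obs = rng.normal(size=(batch, OBS_DIM))
        priv = rng.normal(size=(batch, PRIV_DIM))
        W_student, loss = distill_step(W_student, W_teacher, obs, priv)
        losses.append(loss)
    return W_student, W_teacher, losses
```

In this toy setting the student recovers the part of the oracle's behavior that is predictable from observations alone; the remaining error comes from the privileged inputs it cannot see.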

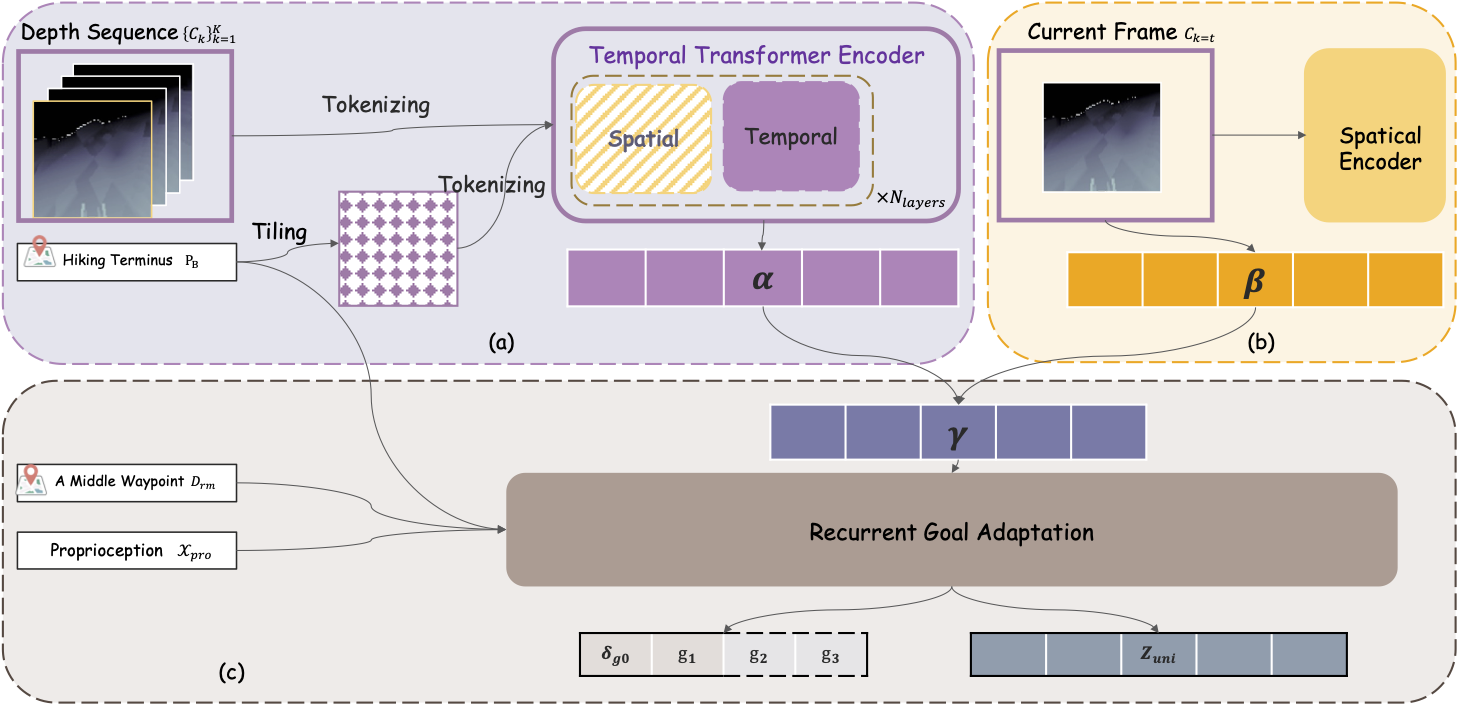

TC-ViT Details. TC-ViT has three key components: (a) a goal-oriented temporal transformer encoder that lets the robot perceive its surroundings in relation to the final goal; (b) a dual process on the current depth frame that integrates spatially precise information reflecting the current state; (c) a recurrent goal adaptation mechanism that integrates visual awareness, goal information, and proprioception.
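To make the data flow between the three components above more concrete, here is a heavily simplified sketch: a goal embedding attends over features of past depth frames (loosely standing in for (a)), the current frame's features are kept separately (standing in for (b)), and a plain recurrent update fuses them with the goal and proprioception (standing in for (c)). This is not the TC-ViT architecture. The single-head attention, the tanh recurrence, the feature sizes, and all names (`goal_attention`, `recurrent_fuse`, `demo`) are assumptions made for illustration, and random vectors stand in for real depth-frame features.

```python
import numpy as np

D = 16  # hypothetical feature width for frames and the goal embedding
P = 6   # hypothetical proprioception size


def softmax(x):
    z = np.exp(x - np.max(x))
    return z / z.sum()


def goal_attention(past_feats, goal_emb):
    """Goal embedding queries T past-frame features; returns (context, weights)."""
    scores = past_feats @ goal_emb / np.sqrt(goal_emb.shape[0])  # shape (T,)
    weights = softmax(scores)
    return weights @ past_feats, weights


def recurrent_fuse(hidden, context, current_feat, goal_emb, proprio, W_h, W_x):
    """Simple tanh recurrence over temporal context, current frame, goal, and proprioception."""
    x = np.concatenate([context, current_feat, goal_emb, proprio])
    return np.tanh(W_h @ hidden + W_x @ x)


def demo(seed=0, T=5, steps=3):
    rng = np.random.default_rng(seed)
    W_h = rng.normal(scale=0.1, size=(D, D))
    W_x = rng.normal(scale=0.1, size=(D, 3 * D + P))
    goal = rng.normal(size=D)
    hidden = np.zeros(D)
    history = [rng.normal(size=D) for _ in range(T)]  # stand-in past-frame features
    weights = None
    for _ in range(steps):
        current = rng.normal(size=D)   # stand-in current-frame features
        proprio = rng.normal(size=P)   # stand-in joint readings
        context, weights = goal_attention(np.stack(history), goal)
        hidden = recurrent_fuse(hidden, context, current, goal, proprio, W_h, W_x)
        history = history[1:] + [current]  # slide the temporal window
    return hidden, weights
```

Calling `demo()` returns the fused hidden state and the last set of attention weights over the frame history; a real policy head would map the hidden state to joint targets.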

HLM Details. HLM encourages the student robot to learn inter-joint dependencies and structural consistencies that align closely with the robot’s physical mechanism, rather than relying on motion priors from human data.

All demo videos on this page showcase the results of LEGO-H's unified policy: robots autonomously navigating and executing motor skills using depth inputs and proprioception.

Website template modified from the incredible UMI-On-Legs, NeRFies, Scaling Up Distilling Down, and AnyCar. This website is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.